Databricks customers already benefit from flexible, unified and open analytics for AI solutions at scale. However, they may want to speed up secure data access while remaining compliance-ready. Databricks data scientists and data analysts want to use data quickly and efficiently, shortening the time-to-value of their data.

Sensitive data is often scattered throughout large data lakes. Enterprises must identify all of it and control access to it to remain secure and compliant, and doing so often comes at the expense of productivity.

Controlling access to data is difficult and time-consuming. Data teams responsible both for delivering data projects and for security and access control often spend 15-30% of their time on these tasks.

Satori streamlines and simplifies data access for Databricks users. With Satori, data teams can implement the required security policies quickly and easily. Satori integrates with Databricks Unity Catalog and provides key features such as user management, access controls, and audit logs.

The integration with Satori enables Databricks users to take advantage of AI and generative AI tools securely, and to scale that usage across multiple teams.

Overview of Data Access Controls with Satori

Data scientists, analysts, and engineers connect to Databricks data through various BI tools or through Unity Catalog to leverage AI and increase productivity.

Databricks Unity Catalog, which governs access to the different metastores, requires customers to migrate their metadata to Unity Catalog before using it. Once the metadata is migrated, Satori applies data protection through Unity Catalog so that any Databricks user can securely access their Databricks SQL stores.

Satori secures data access for Databricks users in four steps, each of which is elaborated in the sections that follow:

- Once a Satori Datastore is connected, Satori’s Posture Manager automatically scans, locates, and classifies all sensitive data across all Databricks workspaces and metastores.

- The Satori administrator and/or the data steward define the security policies and access controls in the Satori management console.

- Users access data either through Satori or directly in Databricks, with the data access controls applied.

- Satori provides audit logs of all user access.

Now, let’s take a look at how this works in practice.

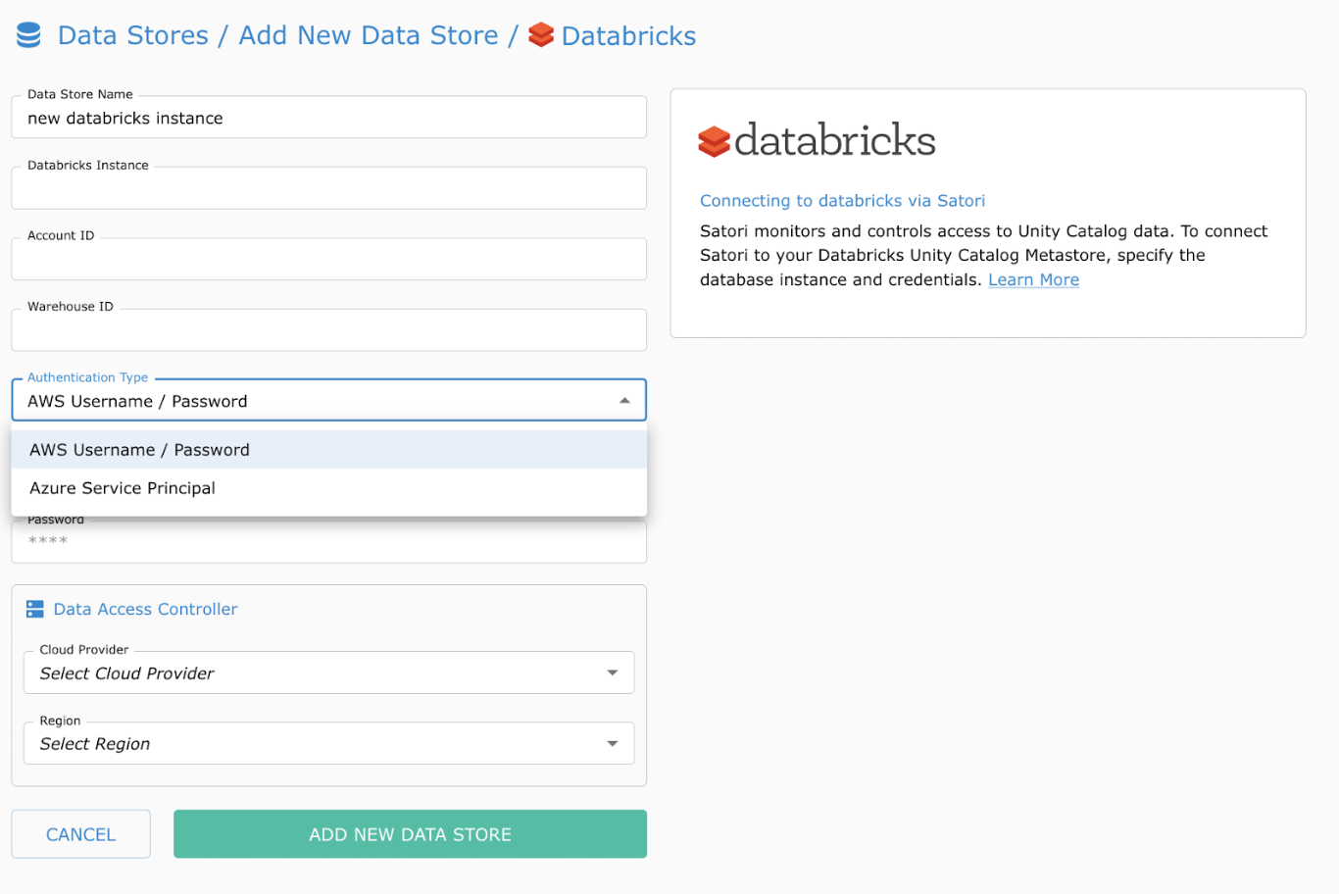

Prerequisites: Connect the Datastore

A Satori dataset is a collection of data store objects, such as tables or schemas from one or more data stores, that you want to govern as a single unit. In Databricks, these objects are Unity Catalog schemas and tables.
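To make the definition concrete, here is a minimal sketch that models a dataset as a named set of Unity Catalog locations, where each location is either a whole schema (`catalog.schema`) or a single table (`catalog.schema.table`). The `Dataset` class, its `covers` method, and the `main.crm` / `main.hr` paths are hypothetical illustrations, not Satori's API or data model.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical model of a Satori dataset; not Satori's actual API.
@dataclass
class Dataset:
    name: str
    # Unity Catalog paths: "catalog.schema" (whole schema) or
    # "catalog.schema.table" (single table).
    locations: set[str] = field(default_factory=set)

    def covers(self, table_path: str) -> bool:
        """True if a fully qualified "catalog.schema.table" is governed by this dataset."""
        catalog, schema, _table = table_path.split(".")
        # Covered if the table itself is listed or its whole schema is listed.
        return table_path in self.locations or f"{catalog}.{schema}" in self.locations


# Usage: one whole schema plus one individual table, governed as a single unit.
customer_pii = Dataset("customer_pii", {"main.crm", "main.hr.people_v2"})
print(customer_pii.covers("main.hr.people_v2"))  # True (table listed)
print(customer_pii.covers("main.crm.orders"))    # True (whole schema listed)
print(customer_pii.covers("main.hr.salaries"))   # False
```

Listing a schema rather than each table means new tables created in that schema fall under the same policies automatically in this model, which is one reason to group objects into a single governed unit.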

The first step to controlling access is to set up a new Satori Datastore connection to Databricks. For more details, see the Satori documentation.

Once you connect successfully, Satori’s Posture Manager automatically scans your Unity Catalog to find sensitive data such as PII and record where it is located.

Satori is now immediately available to protect the data in these locations, create access rules, and show audit results for this datastore. Next, let’s set up the data access controller.

Data Access Controller

Setting up the data access controller takes two steps.

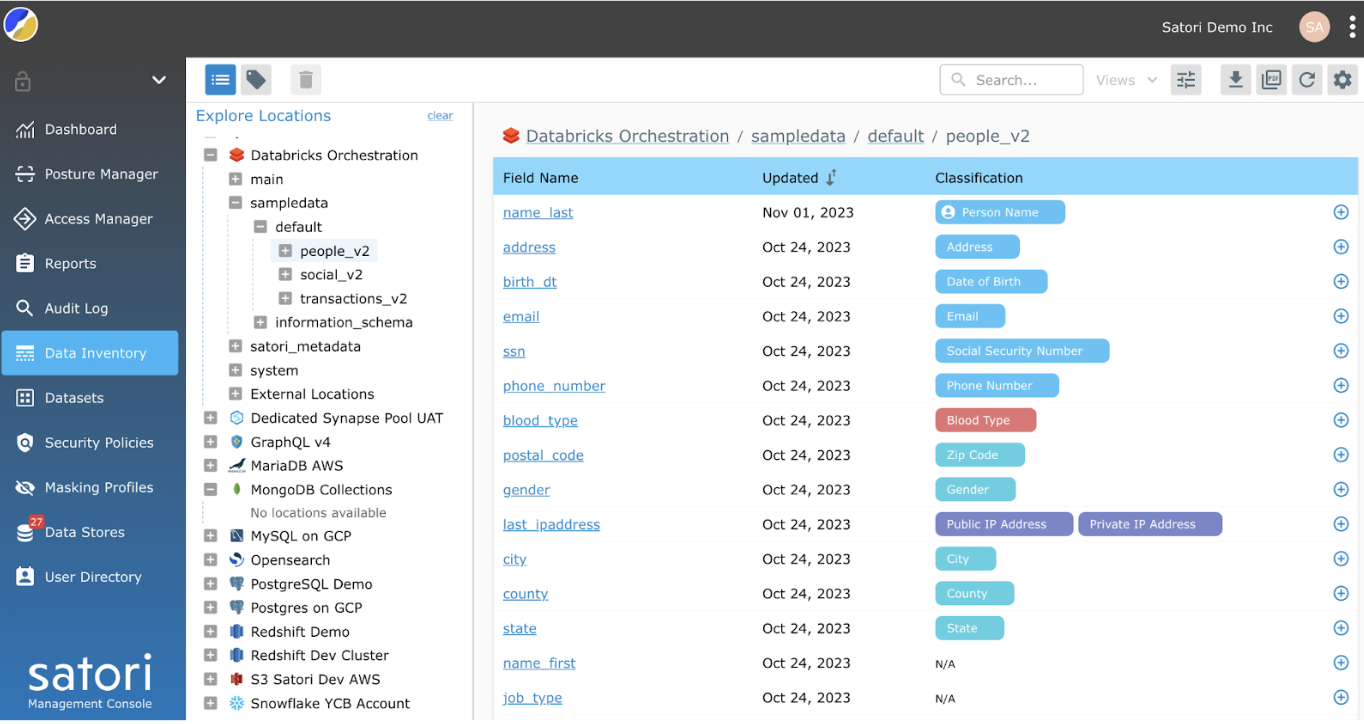

1. Satori Data Inventory & Classification

Satori scans the data to locate sensitive information and automatically classifies it using predefined classifiers.

In this example, on the Satori Data Inventory page, Satori automatically scanned a table called people_v2 and classified several different columns.

Columns can also be reclassified manually instead of relying on the predefined classifiers. In this example, the data steward wanted a different classifier for name_last, so they changed its tag to the Satori classifier Person Name.

An icon next to a classifier, as shown on Person Name, indicates that the classification was updated manually.
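The classify-then-override flow can be sketched as follows, assuming simple regex classifiers applied to sampled column values. Satori's real classifiers are not described in this post; the patterns, the tag names (`EMAIL`, `SSN`, `PHONE`, `PERSON_NAME`), and the sample data are illustrative assumptions. The `manually_set` flag stands in for the icon shown next to a manually updated classification.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately simple classifiers; not Satori's real ones.
# Order matters: SSN is checked before PHONE because "123-45-6789" also fits the phone pattern.
CLASSIFIERS = {
    "EMAIL": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "SSN": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "PHONE": re.compile(r"^\+?[\d\s().-]{7,}$"),
}


def classify_column(samples: list[str]) -> str | None:
    """Return the first tag whose pattern matches every sampled value, else None."""
    for tag, pattern in CLASSIFIERS.items():
        if samples and all(pattern.match(v) for v in samples):
            return tag
    return None


def classify_table(
    columns: dict[str, list[str]], overrides: dict[str, str]
) -> dict[str, tuple[str | None, bool]]:
    """Map each column to (tag, manually_set); a data steward's override always wins."""
    result: dict[str, tuple[str | None, bool]] = {}
    for column, samples in columns.items():
        if column in overrides:
            result[column] = (overrides[column], True)  # flagged as manually updated
        else:
            result[column] = (classify_column(samples), False)
    return result


# Usage: sampled values from a hypothetical people_v2 table.
people_v2 = {
    "email": ["ann@example.com", "bob@example.org"],
    "ssn": ["123-45-6789", "987-65-4321"],
    "phone": ["555-867-5309", "+1 (555) 010-2000"],
    "name_last": ["Smith", "Jones"],
}
tags = classify_table(people_v2, overrides={"name_last": "PERSON_NAME"})
print(tags)
```

Here no pattern recognizes plain surnames, so `name_last` would stay unclassified without the steward's override; that gap is exactly what manual reclassification fills.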

2. Defining the Security Policies

Before granting access to the people_v2 table, the data steward defines the security policies for the datasets and users. This step is typically performed in the Satori management console.

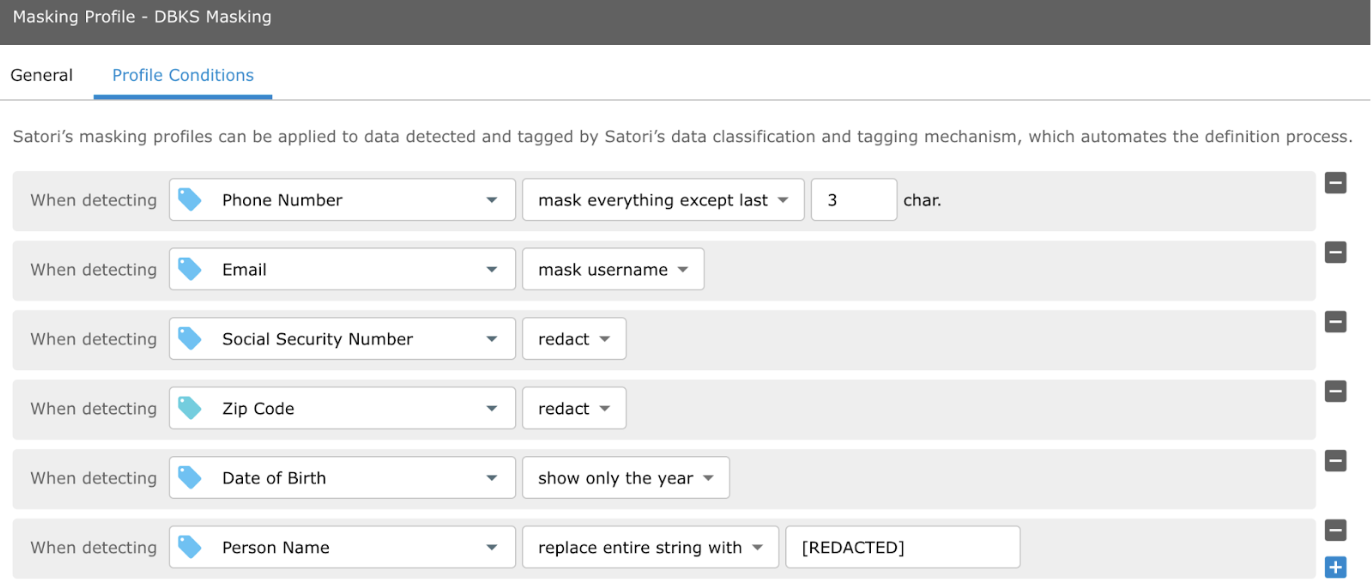

In this case, the data steward defines different masking rules in the Satori management console.

However, at runtime, data flows directly between your Databricks instance and your client tools, not through Satori; Databricks itself enforces the policies.

How Satori Works with Databricks

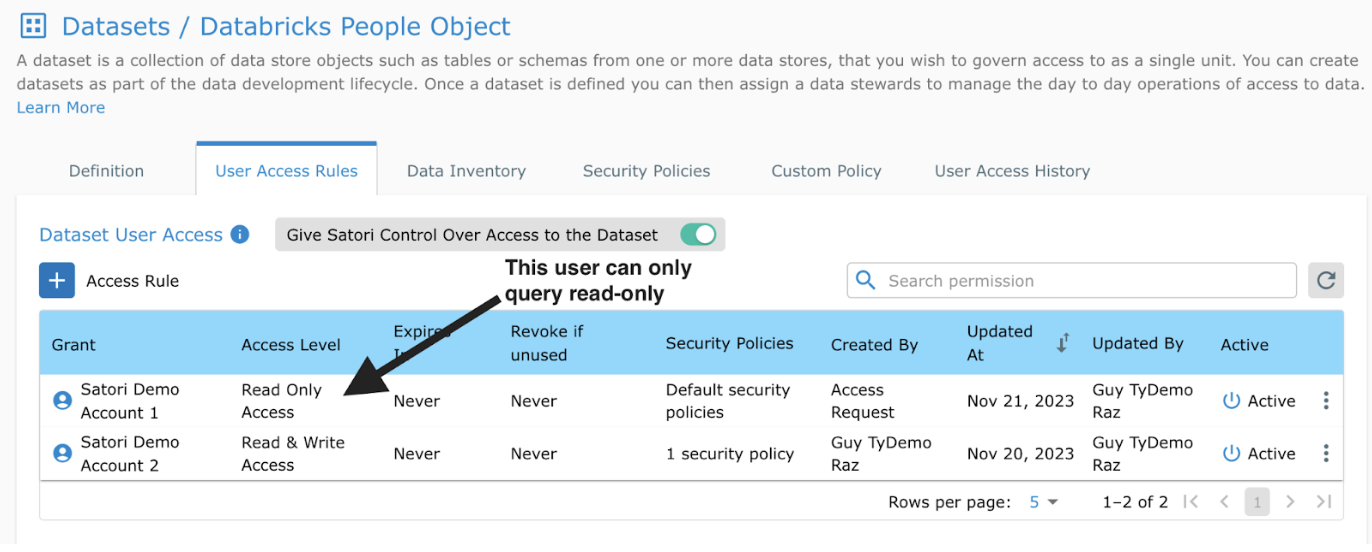

The data steward applies different security policies and masking requirements to different users so that each user can access only the data they are authorized to see.

In the following example, the data steward enabled access to the people_v2 table. Demo User 1 is assigned the default security policy and, based on their characteristics, receives read-only access.

In contrast, the data steward provided Demo User 2 with both read and write access.

Now, when users query this data, they receive results with the applicable security policies and masking applied.

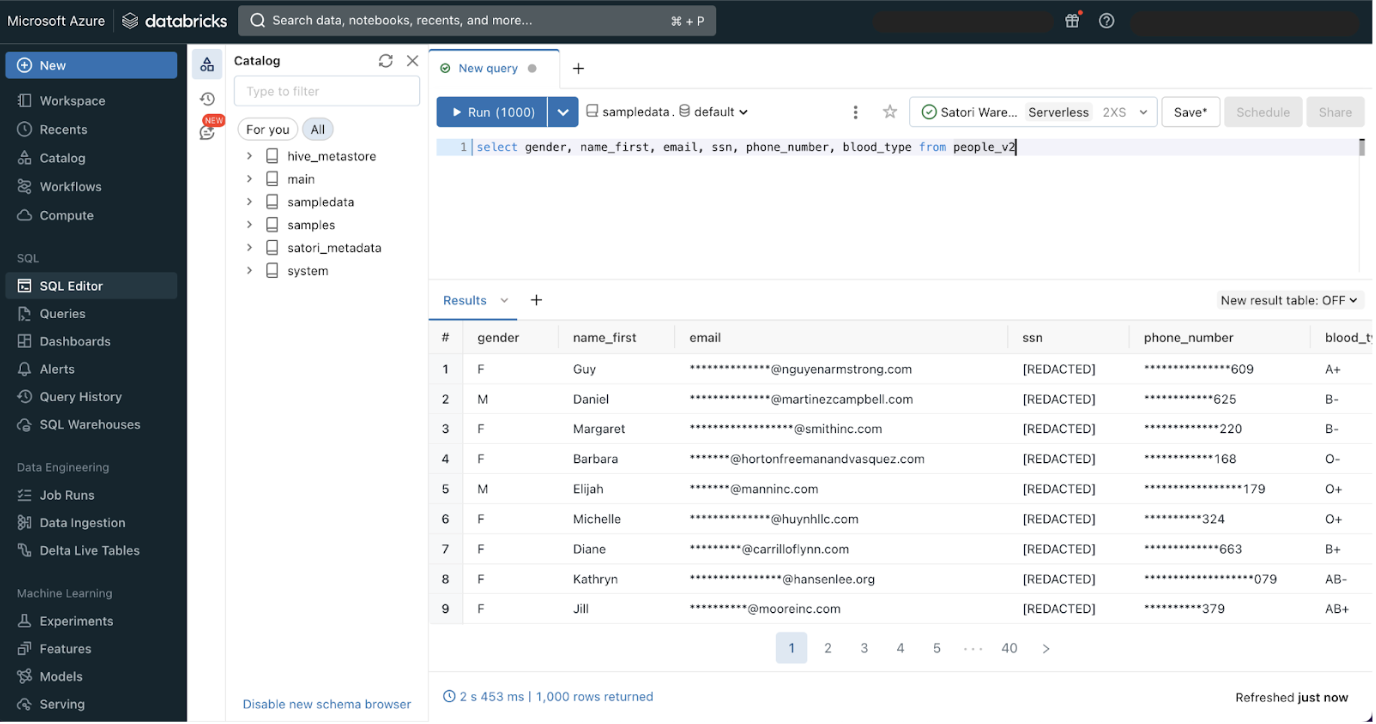

For example, when Demo User 1 runs a Databricks query, the default policies defined in the previous section are applied, and PII such as email addresses, SSNs, and phone numbers is masked.
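The effect of a tag-driven masking policy on query results can be modeled with the minimal sketch below. The mask formats, the `unmasked_users` exemption set, and the user names are assumptions for illustration; the post does not say how Satori materializes its rules. (Databricks' native mechanism for this kind of rule is Unity Catalog column masks, SQL functions attached to a column; the Python only models the behavior.)

```python
from __future__ import annotations

from typing import Callable

# Hypothetical masking functions keyed by classification tag (formats are assumptions).
# Each assumes the value already matches its tag, e.g. an email contains "@".
MASKS: dict[str, Callable[[str], str]] = {
    "EMAIL": lambda v: "****@" + v.split("@", 1)[1],
    "SSN": lambda v: "***-**-" + v[-4:],
    "PHONE": lambda v: "*" * (len(v) - 4) + v[-4:],
}


def apply_masking(rows, column_tags, user, unmasked_users):
    """Return new rows in which every classified column is masked, unless `user` is exempt."""
    if user in unmasked_users:
        return [dict(row) for row in rows]
    result = []
    for row in rows:
        masked_row = {}
        for column, value in row.items():
            mask = MASKS.get(column_tags.get(column))
            # Columns without a masking rule (or NULL values) pass through unchanged.
            masked_row[column] = mask(value) if mask is not None and value is not None else value
        result.append(masked_row)
    return result


# Usage: a hypothetical people_v2 row queried by Demo User 1.
column_tags = {"name_last": "PERSON_NAME", "email": "EMAIL", "ssn": "SSN", "phone": "PHONE"}
rows = [{"name_last": "Smith", "email": "ann@example.com", "ssn": "123-45-6789", "phone": "555-867-5309"}]
masked = apply_masking(rows, column_tags, user="demo_user_1", unmasked_users={"hr_admin"})
print(masked[0])
# {'name_last': 'Smith', 'email': '****@example.com', 'ssn': '***-**-6789', 'phone': '********5309'}
```

Keying masks on classification tags rather than on column names means a newly classified column is masked without writing a new rule for it.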

The user can run the query from the native Databricks UI or from any other client or BI tool connected to Databricks Unity Catalog; the experience is identical.

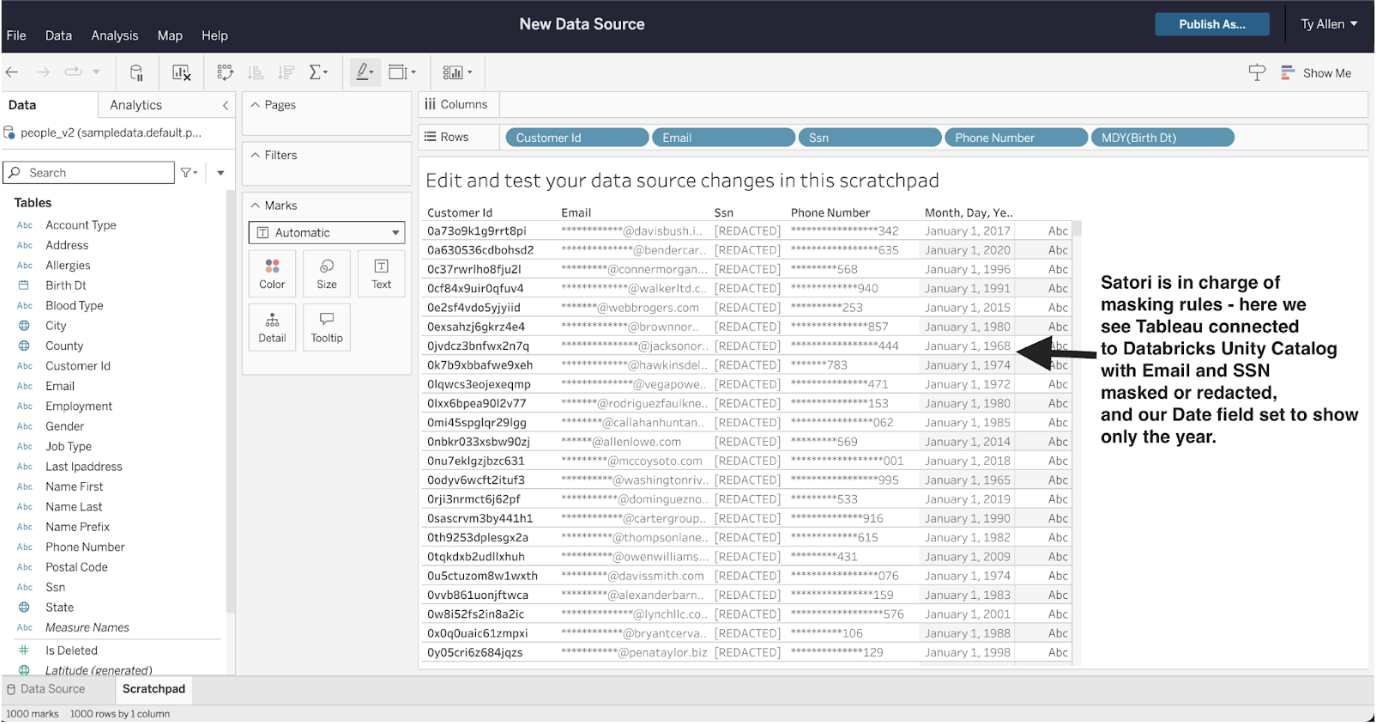

No matter which client tool is used, the rules defined in Satori continue to apply. This example connects through Tableau to the same table as above, and the results are the same:

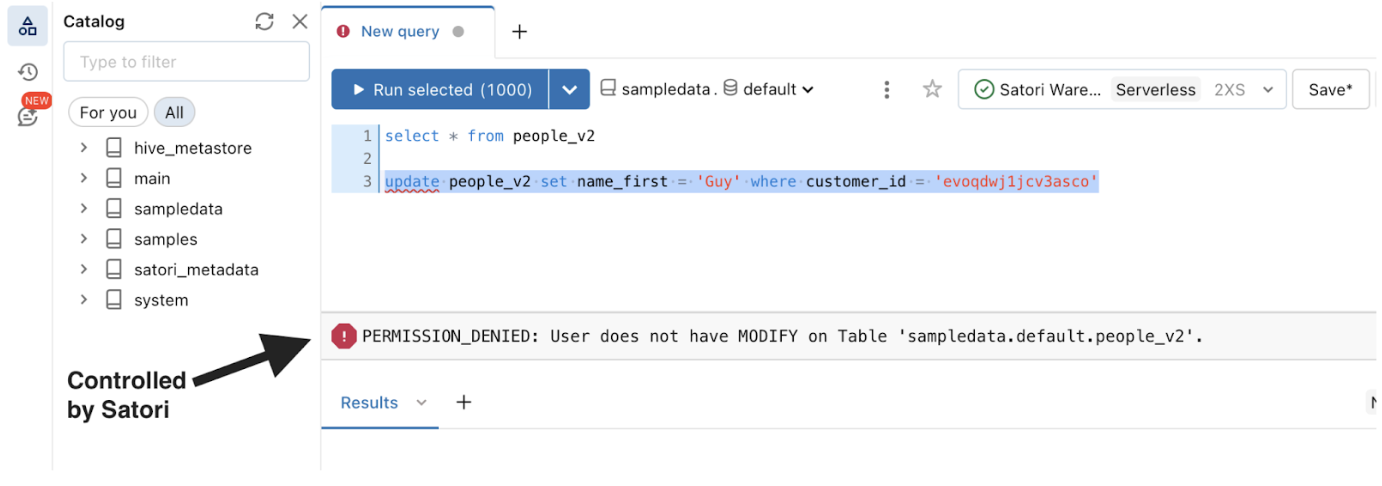

Because Demo User 1 has read-only access, any attempt to write back or edit data is denied.

Satori blocks Demo User 1’s request based on the access rules already defined for this data location. These rules are implemented in the Databricks Unity Catalog workspaces: Databricks receives the rules and enforces them.
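As a rough model of the read-only versus read-write distinction, the sketch below checks each statement against a per-user grant table. The grant table, the `main.hr.people_v2` path, and the keyword-based read/write test are illustrative assumptions; in the integration described here, enforcement happens inside Databricks Unity Catalog, not in client-side Python.

```python
# Hypothetical grants for the people_v2 example; not Satori's configuration format.
GRANTS = {
    ("demo_user_1", "main.hr.people_v2"): {"READ"},
    ("demo_user_2", "main.hr.people_v2"): {"READ", "WRITE"},
}

# Leading keywords treated as writes; a deliberately naive check, not a SQL parser.
WRITE_KEYWORDS = {"INSERT", "UPDATE", "DELETE", "MERGE", "ALTER", "DROP", "CREATE", "TRUNCATE"}


def required_privilege(sql: str) -> str:
    """Classify a statement as READ or WRITE by its first keyword."""
    words = sql.split()
    first = words[0].upper() if words else ""
    return "WRITE" if first in WRITE_KEYWORDS else "READ"


def is_allowed(user: str, table: str, sql: str) -> bool:
    """Allow the statement only if the user holds the privilege it needs on the table."""
    return required_privilege(sql) in GRANTS.get((user, table), set())


T = "main.hr.people_v2"
print(is_allowed("demo_user_1", T, "SELECT * FROM people_v2"))            # True
print(is_allowed("demo_user_1", T, "UPDATE people_v2 SET phone = NULL"))  # False: read-only
print(is_allowed("demo_user_2", T, "UPDATE people_v2 SET phone = NULL"))  # True
```

Users with no entry in the grant table get an empty privilege set, so every request from them is denied by default.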

Auditing & Compliance

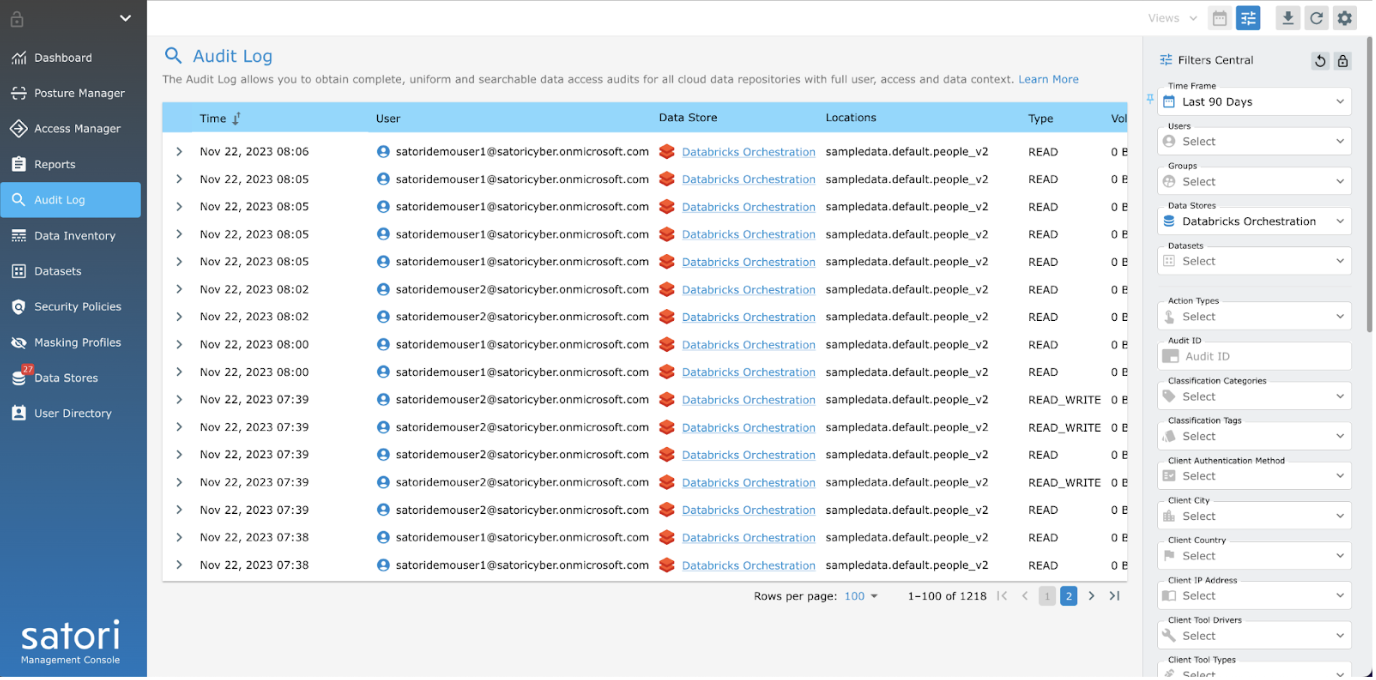

To further ensure security and to monitor who accessed which data, all queries against Unity Catalog are recorded in Satori’s audit log.

These audit logs provide detailed information about actual data usage, helping Databricks users remain compliance-ready.
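To illustrate the kind of questions an audit log answers, here is a minimal sketch over a hypothetical record type. The `AuditRecord` fields, the JSON Lines export, and the timestamps in the usage example are made-up assumptions, not Satori's log schema or real log data.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


# Hypothetical audit record; the fields are assumptions, not Satori's log schema.
@dataclass(frozen=True)
class AuditRecord:
    timestamp: str   # ISO 8601, UTC
    user: str
    client: str      # e.g. "Databricks SQL editor" or "Tableau"
    table: str
    statement: str
    allowed: bool


def to_jsonl(records: list[AuditRecord]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def who_accessed(records: list[AuditRecord], table: str) -> list[str]:
    """Users whose requests against `table` were allowed, sorted by name."""
    return sorted({r.user for r in records if r.table == table and r.allowed})


def denied(records: list[AuditRecord]) -> list[AuditRecord]:
    """Requests that were blocked, e.g. write attempts by read-only users."""
    return [r for r in records if not r.allowed]


# Usage with made-up records mirroring the Demo User 1 / Demo User 2 example.
T = "main.hr.people_v2"
records = [
    AuditRecord("2024-01-01T09:00:00Z", "demo_user_1", "Tableau", T, "SELECT * FROM people_v2", True),
    AuditRecord("2024-01-01T09:05:00Z", "demo_user_1", "Databricks SQL editor", T, "UPDATE people_v2 SET phone = NULL", False),
    AuditRecord("2024-01-01T09:10:00Z", "demo_user_2", "Databricks SQL editor", T, "UPDATE people_v2 SET phone = NULL", True),
]
print(who_accessed(records, T))
print(to_jsonl(denied(records)))
```

Recording denied requests alongside allowed ones lets a compliance review show not only who read the data but also which attempts the policies stopped.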

Conclusion

Satori provides a unified governance solution that keeps sensitive data secure within the Databricks analytics and AI platform. By integrating Databricks with Satori’s management console, data stewards can implement data access and masking rules. These security policies are applied to all Databricks queries, whether the user goes through Satori or directly through a Databricks instance, and also when the user connects through BI tools such as Tableau. Being able to implement security policies quickly and easily protects sensitive data while letting users gain faster time-to-value from their data.

To learn more about how Satori can enhance data access on your Databricks analytics and AI platform, schedule a 30-minute meeting with one of our experts.